Sunday, August 26, 2007

Friday, August 17, 2007

My presentation from JavaBin

If someone is interested in the presentation I did at javaBin in Bergen and Oslo, it can be found here.

Thursday, August 16, 2007

An introduction to categories of type systems

Since the current world is moving away from languages in the classical imperative paradigm, it's more and more important to understand the fundamental type differences between programming languages. I've seen over and over that this is still something people are confused by. This post won't give you all you need - for that I recommend Programming Language Pragmatics by Michael L. Scott, a very good book.

Right now, I just want to clear up the confusion surrounding two ways of categorizing programming languages: strong versus weak typing, and dynamic versus static typing.

The first thing you need to know is that these two classifications are independent of each other, which means there are four different kinds of languages.

First, strong vs weak: A strongly typed language is a language where a value always has the same type, and you need to apply explicit conversions to turn a value into another type. Java is a strongly typed language. Conversely, C is a weakly typed language.
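To make the strong/weak distinction concrete in Ruby, which this post classifies as strongly typed, here is a minimal sketch (the method names are made up for the example). Adding a String to an Integer raises a TypeError instead of converting silently, so the conversion has to be spelled out.

```ruby
# Made-up helper names, for illustration only.
def add_without_conversion
  1 + "2"            # Integer#+ refuses to coerce a String
rescue TypeError => e
  e.class
end

def add_with_conversion
  1 + "2".to_i       # explicit conversion with String#to_i
end

without = add_without_conversion   # TypeError
converted = add_with_conversion    # 3
```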

Secondly, dynamic vs static: A static language can usually be recognized by the presence of a compiler. This is not the full story, though - there are compilers for Lisp and Smalltalk, which are dynamic. Static typing basically means that the type of every variable is known at compile time. This is usually handled by either static type declarations or type inference. This is why Scala is actually statically typed, but looks like a dynamic language in many cases. C, C++, Java and most mainstream languages are statically typed. Visual Basic, JavaScript, Lisp, Ruby, Smalltalk and most "scripting" languages are dynamically typed.
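A small Ruby sketch of what dynamic typing means in practice: a variable carries no declared type and can be rebound to values of different types, and a type error inside a method is only reported when that method actually runs. A statically typed language such as Java would reject the equivalent code at compile time. The names below are illustrative only.

```ruby
# The variable has no declared type; it just refers to whatever value it holds.
value = 42
first_class = value.class        # Integer
value = "forty-two"
second_class = value.class       # String

# Defining a method containing a type error is fine; nothing checks it yet.
def broken_method
  1 + "2"
end

# The error only shows up when the method is actually called.
def call_broken_method
  broken_method
  :no_error
rescue TypeError
  :type_error_at_runtime
end

outcome = call_broken_method
```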

See, that's not too hard, is it? So when I say that Ruby is a strongly, dynamically typed language, you know what that means, right?

C is actually an interesting beast to classify. It's the only weakly, statically typed language I can think of right now. Does anyone have more examples?

To find out more, read the book above, or look up "Type systems" on Wikipedia.


Labels:

programming languages,

type inference,

type systems

Plans - Oslo and Sweden

I'm right now in Bergen, but I'm flying to Oslo later today - I'll basically land and head directly to the javaBin meeting. Afterwards I'm up for beers or something, if someone wants to.

On Friday I'm heading for Sweden. For purely recreational purposes. I'll be in Gothenburg, Malmö and Stockholm for a week or so, so if someone feels like saying hi, do tell. I always like good discussions about programming languages. And other things as well, in fact.


Tuesday, August 14, 2007

Comprehensions in Ruby

Now, this is totally awesome. Jay found a way to implement generic comprehensions in Ruby. In twenty lines of code. It's really quite obvious when you look at it. An example:


```ruby
require 'methodphitamine'  # the gem discussed below; provides it and its

(1..100).find_all &it % 2 == 0
%w'sdfgsdfg foo bazar bara'.sort_by(&its.length).map &it.reverse.capitalize
%w'sdfgsdfg foo bazar bara'.map &:to_sym
%w'sdfgsdfg foo bazar bara'.map &it.to_sym
```

Now, the last two lines are what I like the most. The Symbol to_proc trick is widely used, but I actually think the comprehension version is more readable. It reads even better if you replace "map" with "collect".
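For readers who haven't seen the Symbol to_proc trick: `&:to_sym` works because Ruby calls `to_proc` on whatever follows `&`. A rough hand-written equivalent might look like this; `symbol_to_proc` is a made-up name, and in real code you would simply use the built-in Symbol#to_proc.

```ruby
# Hypothetical helper mimicking what Symbol#to_proc does.
def symbol_to_proc(method_name)
  # The first block argument becomes the receiver; extra arguments are passed along.
  proc { |receiver, *args| receiver.public_send(method_name, *args) }
end

symbols = %w[sdfgsdfg foo bazar bara].map(&symbol_to_proc(:to_sym))
```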

This is actually real fun. You can chain as many methods as you like - you can pass arguments to the methods, you can pass blocks to them, you can chain and nest however you want. It applies to Arrays, Sets, Hashes - everything you can pass a block to can use this, so it's not limited to collections or anything like that.
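The post doesn't reproduce Jay's twenty lines, so here is a guess at how such a proxy can work, not the gem's actual source. `CallRecorder` is a hypothetical name, and the helper is called `its` after the gem's, but this definition is my own: the proxy records every method call made on it and, when passed with `&`, turns the recording into a Proc that replays those calls on each element.

```ruby
# Hypothetical sketch, not Methodphitamine's source: records method calls
# and replays them on each block argument.
class CallRecorder < BasicObject
  # BasicObject defines == and !=; remove them so they get recorded too.
  undef_method :==, :!=

  def initialize(calls = [])
    @calls = calls
  end

  # Called by &recorder: build a Proc that replays the recorded calls in order.
  def to_proc
    calls = @calls
    ::Proc.new do |obj|
      calls.inject(obj) { |receiver, (name, args, blk)| receiver.__send__(name, *args, &blk) }
    end
  end

  private

  # Every other call is recorded, with arguments and block, in a new recorder.
  def method_missing(name, *args, &blk)
    ::CallRecorder.new(@calls + [[name, args, blk]])
  end
end

# Hypothetical helper; each call starts an empty recording.
def its
  CallRecorder.new
end

evens = (1..10).find_all(&its % 2 == 0)
words = %w[sdfgsdfg foo bazar bara].sort_by(&its.length).map(&its.reverse.capitalize)
```

Each recorder is immutable (a call returns a new one), so a partially built chain can be reused safely.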

I think it's really, really cool, and it should be part of Facets, ActiveSupport, hell, the Ruby core library. I want it there.

Now, the only, only, only quibble I have with it... He chose to call it "The Methodphitamine". You should actually use 'require "methodphitamine"'. It's a gem. I love it. But I hate the name. So, read more on his blog, here: http://jicksta.com/articles/2007/08/04/the-methodphitamine.

Labels:

comprehensions,

methodphitamine,

ruby,

tricks

New double-operators in Ruby

So, I had a great time at the London RUG tonight, and Jay Phillips managed to show me two really neat Ruby tricks. The first one is in this post; the next post is about the second.


Now, Ruby supports a limited form of operator overloading, restricted in most cases to the operators the grammar predefines. There is actually a category of operators that are not available through the regular syntax, but that can still be created. I'm talking about almost all the regular single operators followed by either a plus sign or a minus sign.

Right now, I don't have a perfect example of where this is useful, but I guess someone will come up with one. You can use it anywhere you want operators like binary ++, binary --, /-, *+, *-, %-, %+, and so forth.

This trick makes use of the parsing of unary operators combined with binary operators. So, for example, this code:

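```ruby
# Marker module: tags a string that has been through unary plus
module FixProxy; end

class String
  # Unary plus (+"Baz") tags the receiver with FixProxy and returns it
  def +@
    extend FixProxy
  end

  alias __old_plus +

  # Binary plus checks whether its argument was tagged by unary plus
  def +(val)
    if val.kind_of?(FixProxy)
      __old_plus(" BAR " << val)
    else
      __old_plus(val)
    end
  end
end

# Parsed as "Foo" + (+"Baz")
puts("Foo" ++ "Baz")
```

will actually output "Foo BAR Baz".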

I'm pretty certain someone can find a good use for it.
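To see the parse rule in isolation without reopening String, here is a sketch using a made-up `Token` class: `a ++ b` is parsed as `a + (+b)`, so unary plus can mark its operand and binary plus can react to the mark. This relies on the left operand being a value such as a literal or a local variable; with a bare method name on the left, Ruby may instead read the pluses as unary and pass the result to that method as an argument.

```ruby
# Hypothetical class for illustration only; not from the original post.
class Token
  attr_reader :text

  def initialize(text, marked = false)
    @text = text
    @marked = marked
  end

  # True if this token came out of unary plus.
  def marked?
    @marked
  end

  # Unary plus (+tok) returns a marked copy instead of mutating the receiver.
  def +@
    Token.new(text, true)
  end

  # Binary plus picks a different separator when the right operand is marked.
  def +(other)
    separator = other.marked? ? " ++ " : " + "
    Token.new(text + separator + other.text)
  end
end

a = Token.new("a")
b = Token.new("b")
plain = (a + b).text      # "a + b"
doubled = (a ++ b).text   # parsed as a + (+b), giving "a ++ b"
```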

Monday, August 13, 2007

London RUG tonight

Anyone showing up for the London RUG tonight? It looks like it will be interesting: Ruby2Ruby and ParseTree, and also Jay Phillips on Adhearsion (which is really cool).

I will not be presenting, so don't worry about that. =)

Hope to see you there.


Friday, August 10, 2007

ActiveHibernate is happening

So, it seems someone stepped up to the challenge and started working on ActiveHibernate from my initial idea. The code was pushed online a while back and I have finally had the time to look at it. Overall it looks really nice.

Of course, it's very early days. I've submitted some patches making the configuration aspect easier and more ActiveRecord-like, but it's going to be important to have access to all the features of Hibernate too. Where the two conflict, I don't think we should aim for total ActiveRecord equivalence.

Read more here and find the Google Code project here.

I would encourage everyone interested in a merger between Rails and Hibernate to take a look at this. Now is the time to offer advice on how it should work, what it should do and what it should look like.


Labels:

activehibernate,

hibernate,

jruby,

rails

RailsConf Europe


I am a speaker at RailsConf Europe in Berlin. I'm going to talk about JRuby on Rails at ThoughtWorks. Hopefully many of you will make it there! As far as I know, the whole JRuby core team may be together for the first time ever, so it's going to be a great conference for JRuby.

See you in Berlin!

Labels:

berlin,

jruby,

jruby on rails,

railsconf

Thursday, August 09, 2007

NetBeans Ruby support

It's very nice. Each time Tor Norbye posts screenshots of the NetBeans Ruby support on his blog, I see a new interesting feature. What I saw this time (here) was that the gotcha from my entry called "Ruby is hard" can now be detected by NetBeans. It's not really too hard, since NetBeans has the parse trees. But it's still a very nice feature to have in your editor.

But I ain't switching from Emacs yet...


Wednesday, August 08, 2007

Ruby is hard

I have been doing Ruby programming for several years now, and been closely involved with the JRuby implementation of it for about 20 months. I think I know the language pretty well, and I think I have for a long time. This has blinded me to something which I've just recently started to recognize. Ruby is really hard. It's hard in the same way LISP is hard. But there are also lots of gotchas and small things that can trip you up.


Ruby is a really nice language. It's very easy to learn and get started in, and you will be productive extremely quickly, but to actually master the language takes much time. I think this is underestimated in many circles. Ruby uses lots of language constructs which are extremely powerful, but they require you to shift your thinking - especially when coming from Java, which many current Ruby converts do.

As a small example of a gotcha, what does this code do:

```ruby
class Foo
  attr_accessor :bar

  def initialize(value)
    bar = value
  end
end

puts Foo.new(42).bar
```

If your answer is that it prints 42, you're wrong. It will print nil. What's the necessary change to fix this? Introduce self:

```ruby
class Foo
  attr_accessor :bar

  def initialize(value)
    self.bar = value
  end
end

puts Foo.new(42).bar
```

You could argue that this behavior is annoying. That it's bad. That it's wrong. But that's the way the language works, and I've seen this problem in many code bases - including my own - so it's worth keeping an eye open for it.

Why does it happen? The problem is this: when the Ruby parser finds a word that begins with an underscore or a lowercase letter, and that word is followed by an equals sign, there is no way for the parser to know if this is a method call (the method bar=) or a variable assignment. The parser falls back to assuming it is a variable assignment, and you have to specify explicitly when it should be a method call, by giving it an explicit receiver such as self. So what happens in the first example is that a new local variable called "bar" is introduced and assigned to.
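A small variant of the Foo example (the class and method names are made up) makes the two readings visible side by side. Inside `demo`, `bar` refers to the local variable created by the assignment, while `self.bar` still goes through the accessor, which was never set. In `fixed_demo` the explicit receiver turns the assignment into a call to `bar=`.

```ruby
# Hypothetical variant of the post's Foo class, returning both views of "bar".
class GotchaDemo
  attr_accessor :bar

  def demo(value)
    bar = value          # parsed as a new local variable, not a call to bar=
    [bar, self.bar]      # local variable vs. the (never assigned) accessor
  end

  def fixed_demo(value)
    self.bar = value     # explicit receiver: this calls the bar= method
    [bar, self.bar]      # no local named bar here, so both read the accessor
  end
end

broken = GotchaDemo.new.demo(42)        # [42, nil]
fixed = GotchaDemo.new.fixed_demo(42)   # [42, 42]
```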

JRuby presentation in Bergen

Next Wednesday (the 15th) I will present about JRuby in Bergen. I mentioned this in an earlier post. More information can be found here: http://www4.java.no/web/show.do?page=42;7&appmode=/showReply&articleid=5493.

Monday, August 06, 2007

JRuby now JIT-compiles assertions

One of the major problems when running automated tests with JRuby was that none of the standard Test::Unit assertions were ever JIT compiled, meaning that they were quite slow. In fact, assertions seem to be very slow when running interpreted in JRuby. I have a small test case, courtesy of Michael Schubert:


```ruby
require 'test/unit'
require 'benchmark'

class A < Test::Unit::TestCase
  [10_000, 100_000].each do |n|
    define_method "test_#{n}" do
      puts "test_#{n}"
      5.times do
        puts Benchmark.measure{n.times{assert_equal true,true}}
      end
    end
  end
end
```

This code shows quite nicely how large the overhead of asserts is, by using assert_equal. Now, the numbers for MRI for this benchmark look like this:

```
Loaded suite test_assert
Started
test_10000
0.150000 0.000000 0.150000 ( 0.155817)
0.150000 0.000000 0.150000 ( 0.158376)
0.160000 0.000000 0.160000 ( 0.155575)
0.150000 0.000000 0.150000 ( 0.154380)
0.160000 0.000000 0.160000 ( 0.157737)
.test_100000
1.520000 0.010000 1.530000 ( 1.539325)
1.530000 0.000000 1.530000 ( 1.543889)
1.520000 0.010000 1.530000 ( 1.540376)
1.530000 0.000000 1.530000 ( 1.543742)
1.530000 0.010000 1.540000 ( 1.558292)
.
Finished in 8.509493 seconds.

2 tests, 550000 assertions, 0 failures, 0 errors
```

And for JRuby without compilation:

```
Loaded suite test_assert
Started
test_10000
1.408000 0.000000 1.408000 ( 1.408000)
0.582000 0.000000 0.582000 ( 0.582000)
0.425000 0.000000 0.425000 ( 0.426000)
0.419000 0.000000 0.419000 ( 0.418000)
0.466000 0.000000 0.466000 ( 0.467000)
.test_100000
4.189000 0.000000 4.189000 ( 4.190000)
4.196000 0.000000 4.196000 ( 4.196000)
4.139000 0.000000 4.139000 ( 4.139000)
4.165000 0.000000 4.165000 ( 4.165000)
4.162000 0.000000 4.162000 ( 4.162000)
.
Finished in 24.181 seconds.

2 tests, 550000 assertions, 0 failures, 0 errors
```

It's quite obvious that something is very wrong. We're about 2.5-3 times slower.

Now, the way the JRuby compiler works, we build it piece by piece and the JIT will try to compile a method that's used enough. If there is any node that can't be compiled it will fail and fall back on interpretation. In the case of assertions, all Test::Unit assertions use a small helper method called _wrap_assertion that looks like this:

```ruby
def _wrap_assertion
  @_assertion_wrapped ||= false
  unless (@_assertion_wrapped)
    @_assertion_wrapped = true
    begin
      add_assertion
      return yield
    ensure
      @_assertion_wrapped = false
    end
  else
    return yield
  end
end
```

When I started out on this quest, there were two things in this method that didn't compile. The first is the ||= construct, which I mentioned in an earlier blog post. The problem with it is that it requires that we can compile DefinedNode too, and that one is large. The second problem node is Ensure. After lots of work, I've finally managed to implement most of these safely, falling back on interpretation when it's not safe. Without further ado, the numbers after compilation with these features added:

```
Loaded suite test_assert
Started
test_10000
0.996000 0.000000 0.996000 ( 1.013000)
0.415000 0.000000 0.415000 ( 0.415000)
0.110000 0.000000 0.110000 ( 0.110000)
0.099000 0.000000 0.099000 ( 0.100000)
0.109000 0.000000 0.109000 ( 0.109000)
.test_100000
1.012000 0.000000 1.012000 ( 1.012000)
1.008000 0.000000 1.008000 ( 1.000000)
1.017000 0.000000 1.017000 ( 1.017000)
1.039000 0.000000 1.039000 ( 1.039000)
1.024000 0.000000 1.024000 ( 1.024000)
.
Finished in 6.966 seconds.
```

So we're looking at more than a 4x speedup over interpreted JRuby, and on the 100,000-assertion runs JRuby now needs roughly a third less time than MRI. Try your own test cases; hopefully the improvement shows up there too.
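To see why `_wrap_assertion` needs both `||=` and `ensure`, here is a stand-alone sketch. `FakeTestCase`, `my_assert` and `my_assert_both` are hypothetical stand-ins for Test::Unit; only the body of `_wrap_assertion` is taken from the post. The guard counts a composite assertion once, and the `ensure` resets the flag both on the early `return` and when an assertion fails.

```ruby
# Hypothetical stand-in for a Test::Unit test case; only _wrap_assertion
# mirrors the code in the post.
class FakeTestCase
  attr_reader :assertion_count

  def initialize
    @assertion_count = 0
  end

  def add_assertion
    @assertion_count += 1
  end

  def _wrap_assertion
    @_assertion_wrapped ||= false      # the ||= that needed DefinedNode support
    unless (@_assertion_wrapped)
      @_assertion_wrapped = true
      begin
        add_assertion
        return yield
      ensure
        @_assertion_wrapped = false    # runs on return and on exceptions
      end
    else
      return yield                     # nested assertion: not counted again
    end
  end

  def my_assert(value)
    _wrap_assertion { raise "assertion failed" unless value; value }
  end

  # A composite assertion built from two simple ones.
  def my_assert_both(a, b)
    _wrap_assertion { my_assert(a); my_assert(b) }
  end
end

tc = FakeTestCase.new
tc.my_assert(true)
tc.my_assert_both(true, true)
count_after_passing = tc.assertion_count   # 2: nested calls are not counted

begin
  tc.my_assert(false)
rescue RuntimeError
  # the failure propagates, but ensure has already reset the flag
end
```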

Labels:

assertions,

compilation,

jruby,

test/unit

JRuby presentation at javaBin in Norway

Next week I'm coming to Norway to do two presentations at the Java User Groups in Bergen and Oslo. I will visit Bergen on the 15th, and Oslo on the 16th. I will have 2x45 minutes to talk, so there will be lots of time for interesting detours, and hopefully some discussion too. If you're in the area, do show up! More information about the Oslo evening can be found here: http://www4.java.no/web/show.do?page=42;7&appmode=/showReply&articleid=5447.

After Norway I'm going to Sweden. I'll be in Gothenburg for a few days, Malmö a few more, and then stop by in Stockholm the 23rd to 26th. I'm not going to present while there, though. It's purely recreational.


JRuby is now Java 5+

So, after some discussion on the #jruby IRC channel, the core team has decided to go with a Java 5+ strategy on trunk. The reason is that almost everyone who commented on the issue advised us to move on, and the features of Java 5 are quite compelling. We are moving to annotations for describing Java method bindings, we will use enumerations for many values, and we will be able to use the native concurrency libraries. All of these are large wins.

Of course, we are not leaving 1.4 users completely behind. First of all, we provide a Retrotranslator/Retroweaver (we haven't decided which one yet) version, and will continue doing so. Secondly, the 1.0 branch will continue supporting Java 1.4.

This is the current state. First versions of the annotation based method bindings are already in trunk.


Thursday, August 02, 2007

The pain of compiling try-catch

I've been spending some time trying to implement a compiler for the defined? feature of Ruby. If you haven't seen it, be happy. It's quite annoying, and incredibly complicated to implement, since you basically need to create a small interpreter just for the nodes that can appear within defined?. So why is defined? so important? Well, for one it's actually needed to implement the ||= construct correctly. And that construct is used everywhere, which means that not compiling it would severely impact our ability to compile code. Also, it just so happens that OpAsgnOrNode (as it's called) and EnsureNode are the two nodes left to implement to be able to compile the Test::Unit assert methods, since the internal _wrap_assertion uses both ensure and ||=.
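Why `||=` needs `defined?` is easiest to see with a class variable. In the sketch below (the `Counter` class is made up, and it shows Ruby semantics rather than JRuby's compiled output), `@@hits ||= 0` works even though `@@hits` doesn't exist yet, while a naive `a || (a = b)` expansion reads the variable first and raises NameError.

```ruby
class Counter
  # Works although @@hits is undefined: Ruby checks whether the
  # variable is defined before reading it.
  @@hits ||= 0

  # A naive "a || (a = b)" expansion reads the variable first,
  # which raises NameError for an undefined class variable.
  def self.naive_expansion_raises?
    @@misses || (@@misses = 0)
    false
  rescue NameError
    true
  end

  def self.hits
    @@hits
  end
end
```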

So, now you know why. Next, a quick intro to the compilation strategy of JRuby. Basically we try to compile each script and each method into one Java method. We try to use the stack as much as possible, since that way we can chain statements together correctly. And that's about it.

The problem enters when you need to handle exceptions in the emitted Java bytecode. This isn't a problem in the interpreter, since we explicitly return a value for each node, and the interpreter doesn't use the Java stack as much as the compiler does. We also want to be able to use finally blocks in some places, especially to ensure that ensure can be compiled down, but also to make the implementation of defined? safe.

So what's the problem? Can't we just emit the catch-table and so on correctly? Well, yes, we can do that. But it doesn't work. Because of a very annoying feature of the JVM. Namely, when a catch-block is entered, the stack gets blown away. Completely. So if the Ruby code is in the middle of a long chained statement, everything will disappear. And what's worse, this will actually fail to load with a Verifier exception, saying "inconsistent stack height", since there will now be one code path with things on the stack, and one code path with no values on the stack, and the way JRuby works, these will end up at the same point later on. And the JVM doesn't allow that either.

This makes it incredibly hard to handle these constructs in bytecode, and frankly, right now I have no idea how to do it. My first approach was to create a new method for each try-catch or try-finally, and just put the code in there instead. The nice thing about that is that the surrounding stack will not be blown away, since it belongs to the invoking method and not to the current activation frame. And that approach actually works fairly well. Until you want to refer to values from outside the try or catch block. Then it breaks down.

So, right now I don't know what to do. We have no way of knowing at any specific place how deep the stack is, so it's not possible to copy it somewhere and then restore it in the catch block. That would be totally inefficient too. In fact, I have no idea how other implementations handle this. There's gotta be a trick to it.


Labels:

compilation,

defined?,

inconsistent stack height,

jruby

Wednesday, August 01, 2007

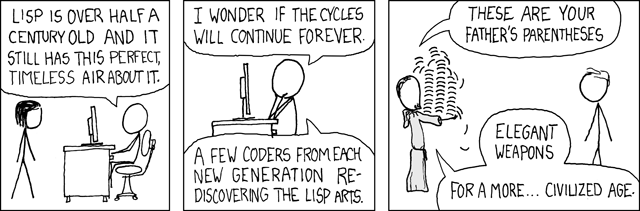

I love XKCD
